U.S. News & World Report (USNWP) recently released its newest iteration of “Best Hospitals 2014-15.” It did not take long for hospitals around the country to begin to use that newsmagazine’s endorsement in their marketing materials, including paying licensing and advertising fees to USNWP for the privilege. Readers of this health policy series are aware of my unaltered position on the importance of transparency and accountability in our national system of medical care. However, you should also be aware of my increasing skepticism about existing attempts to distill complex clinical or medical financial information into simple icons or letter grades to represent safe or quality medical care: they are not yet ready for prime time. Indeed, by themselves, such “ratings” can be unhelpful or even misleading in assisting an individual to select a hospital for a specific need. In Kentucky we have seen previously highly-rated services shut down when the facts on the ground were revealed.

Background.

In several earlier articles, I made efforts to look at the data underlying hospital safety and quality rankings proposed by a number of credible organizations. Even though these private agencies largely use the same data sets from Medicare, other federal agencies, and the American Hospital Association (AHA), I was troubled by the fact that different rating organizations gave different grades to the same hospital for the same interval, and that the same hospital would be graded very differently by the same organization in subsequent years. The statistician in me was surprised by the nearly perfect self-reported scores by some hospitals. I was prepared to accept that my own perception (or prejudice) of what constitutes a good hospital might differ from an evidence-based evaluation but was surprised at how great the disparity could be! In addition, a great many (mostly small) hospitals where quality is also a concern are not even included in most evaluations simply because they do not have to report their data to Medicare!

Troubling also was my growing realization that the hospital-rating business is just that – a business. Hospitals may first have to pay-to-play, and then pay-to-use any ranking endorsements in their marketing. This, of course, is the same economic impetus behind other ranking initiatives such as “Best Hamburger in Louisville.” For example, hospitals can pay to place an advertisement as a “featured sponsor” in the lists of hospitals generated on the USNWP website, or to use the “Best Hospital” logo in their own ads. USNWP gives hospitals no say on whether they are evaluated as a Best Hospital, but other agencies may give hospitals the option of paying a participation fee and using a proprietary survey form. In my opinion, based on observations in Kentucky, paying-to-play can improve a hospital’s ranking. I certainly came to that conclusion; others might as well. Meaningful systems of comparison are compromised when different things are measured.

Because I had not looked previously in any depth at the USNWP ranking system, because it has been nationally prominent for some time, and because I wanted to know how to interpret advertisements placed by Kentucky hospitals based on its ratings, I dug into the methodology and numbers underlying the 2014-15 report. My opinion remains unchanged that such rankings by themselves provide insufficient grounds on which to base the choice of a hospital. I am not alone.

A massive computational challenge.

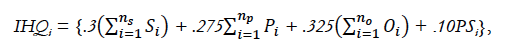

I must give USNWP credit for a valiant effort! It evaluates hospitals for each of 16 different medical/surgical subspecialties. It stuffs a frankly stupendous amount of information into four categories: Structure, Process, Outcomes, and Safety. In some ways it stands alone: I am unaware of any other organization that attempts to break down mortality following hospital admission for so many different disease states. “Will I get out alive” is probably the easiest-to-understand and arguably the most valued “outcome measure” for potential patients! USNWP (or its contractor RTI International) uses the MedPar database of all individual Medicare fee-for-service in-patient hospital discharges to make this calculation. The downside is that the statistical methods used for disease-severity adjustment, normalization, and other manipulations are too complicated for mere mortals or even most medical professionals like me to follow, and are to my knowledge not validated for this purpose. Here, as an illustration, is the formula used to weight the four different categories of evaluation for each specialty:

What are potential issues?

USNWP has been criticized for its ranking methodology. For example, not every hospital is evaluated. Only hospitals associated with medical schools, teaching hospitals, or large hospitals with overflowing technology even make it to the starting line of the ranking process. Only 2280 of 4743 adult acute-care community hospitals are considered at all. The Structure score comprises several reasonable-sounding individual elements such as patient volume, survival, nurse/patient ratios, Nurse Magnet recognition, advanced technologies, patient services, having a state-designated trauma center within the hospital, or having a trained physician intensivist on the staff of at least one ICU. The Process score depends entirely on reputation generated from questionnaires distributed randomly to selected physicians. Reputation is something that can be bought as well as earned and is one of the subjective factors that evidence-based medicine is trying to get away from. USNWP justifies the use of reputation as a form of professional “peer review.” Outcome is based entirely on mortality in the first 30 days after hospital admission. The Safety component draws on the same set of mostly self-reported factors discussed in earlier articles. These latter two categories are based entirely on a subset of Medicare admissions, which exposes the evaluation method to the expected criticism that it is not fully applicable to all medical admissions, all illnesses, or patients of all ages.

Several of the measured items are summed over a three-year period ending in 2012 and are thus outdated from the start. This summation moderates the impact of short-term variance and of unusual but predictable events, but masks when a hospital is in ascendancy or in trouble. As with most if not all such ratings, the present report has trouble dealing with stand-alone hospitals, or those in systems of care. For example, Medicare collects its quality and safety data by provider number, not by individual hospital. From Medicare’s perspective there is only one Norton Hospital, and only one Jewish and St. Mary’s Hospital. We patients might wish to make our decision on a more granular level. A more technical description of the evaluation methodology can be found on the organization’s website. An appendix summarizing the various factors used for the evaluation is attached.

What should the USNWP recommendations be used for, if at all?

In its defense, USNWP is up-front that its ranking methodology has a limited defined purpose: “to identify the best medical centers for the most difficult patients—those whose illnesses pose unusual challenges because of underlying conditions, procedure difficulty or other medical issues that add risk.” It specifically advises that its recommendations are not meant to suggest that other hospitals cannot or do not provide excellent routine medical care closer to home. This stated intent can rationalize the focus on larger high-tech teaching hospitals that are more likely to have experience providing cutting-edge services. It does not make all potential criticism melt away.

Who makes the cut?

A global “Best Hospitals” score is derived from an aggregate score of all specialties. For each of 16 different medical/surgical specialties, USNWP also ranks nationally the 50 hospitals with the highest composite specialty-specific numerical scores. The best hospitals are evaluated independently of cost or location. In addition, the report identifies regional hospitals with “high-performing” specialty services: those in which the specialty-specific numeric score falls in the top quarter of evaluated hospitals. Hospitals with at least one high-performing specialty are considered to be best regional hospitals for that specialty but are not numerically ranked. Such a designation allows a hospital to use the silver “badge” of best regional hospital in its marketing of that specialty. However, hospitals in lists of regional hospitals from the USNWR website are sorted in an order other than their specialty-specific numeric score. (An example from Kentucky will be presented below.)

How did we do in Kentucky?

Sadly, for all the bloviating about world-class health care available in Kentucky, according to the criteria used by USNWR, there is not a single “nationally ranked” hospital in the Commonwealth for any specialty. Bummer! Alternatively, if our health care is as good as we say it is, the problem must be with the methods used to evaluate safety and quality, right? Which is it?

In terms of having hospitals that meet the standard for high-performing specialties, we did marginally better. Nine Kentucky hospitals were recognized for having one or more such specialty services. They are given a numerical rank within the state by number of high-performing services.

Best Regional Hospitals in Kentucky. (Name, #High-Performing Specialties)

#1. Baptist Health Lexington – 10

#2. Baptist Health Louisville – 9

#2. St. Elizabeth Edgewood – 9

#4. University of Kentucky Hospital – 7

#5. Norton Hospital – 3

#6. Jewish Hospital – 2

#7. Kings Daughters Medical Center – 1

#7. St. Elizabeth Florence – 1

#7. University of Louisville Hospital – 1
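The tied positions in the list above (#2, #2, then #4) follow what is usually called standard competition ranking. Here is a minimal Python sketch of that rule, using only the names and counts listed above. The function name `competition_rank` is my own, and I am assuming, not asserting, that this is how the in-state ranks were actually assigned.

```python
# Hospital names and high-performing specialty counts, as listed above.
hospitals = [
    ("Baptist Health Lexington", 10),
    ("Baptist Health Louisville", 9),
    ("St. Elizabeth Edgewood", 9),
    ("University of Kentucky Hospital", 7),
    ("Norton Hospital", 3),
    ("Jewish Hospital", 2),
    ("Kings Daughters Medical Center", 1),
    ("St. Elizabeth Florence", 1),
    ("University of Louisville Hospital", 1),
]


def competition_rank(entries):
    """Rank by count, highest first. Tied counts share a rank,
    and the rank after a tie skips ahead (1, 2, 2, 4, ...)."""
    # sorted() is stable, so tied hospitals keep their input order.
    ordered = sorted(entries, key=lambda entry: -entry[1])
    ranked = []
    for position, (name, count) in enumerate(ordered, start=1):
        if ranked and ranked[-1][2] == count:
            rank = ranked[-1][0]  # same count as previous: share its rank
        else:
            rank = position  # otherwise the rank is the list position
        ranked.append((rank, name, count))
    return ranked


ranking = competition_rank(hospitals)
for rank, name, count in ranking:
    print(f"#{rank}. {name} - {count}")
```

Note that a rank computed this way says nothing about how strong any single specialty is; it only counts badges.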

Who got what?

Eight of the above hospitals were high performing in pulmonology. (In our coal mining and hard-smoking state, we get a lot of practice!) Not a single hospital was high-performing in ophthalmology, psychiatry, rehabilitation, or rheumatology. These last four specialties are evaluated by reputation alone, not by good works. For one important high-volume specialty, cardiology & heart surgery, only a single hospital achieved high-performing recognition – Jewish Hospital in Louisville. It was one of only 178 hospitals nationally to receive the high-performing designation in that specialty. Jewish Hospital has long emphasized and marketed its status as a heart-hospital and is understandably proud. That pride has expressed itself in a series of full-page newspaper ads proclaiming the status of “#1 Heart Hospital in the state,” and “the Best for Cardiology and Heart Surgery in Kentucky.” The silver badge for best regional hospital for cardiology and heart surgery is also displayed.

Getting our heads around the scores.

To frame the discussion above in a more concrete and, I believe, more understandable way, I will use the example of Jewish Hospital’s designation in Cardiology & Heart Surgery. Nationally, 708 hospitals met the cardiology criteria of volume, reputation, and technology to be considered for national ranking. Jewish received a “U.S. News Score” in cardiology of 46.2. For comparison, the lowest cardiology score awarded to a national top-50 cardiology hospital was 57.2; that was for the University of Michigan Hospitals in Ann Arbor. When I queried the USNWR website for all hospitals in Kentucky evaluated for the specialty of cardiology and heart surgery, these 13 hospitals were returned in the following order.

1 Jewish Hospital

2 Baptist Health Lexington

3 Baptist Health Louisville

4 St. Elizabeth Edgewood

5 University of Kentucky

6 Norton Hospital

7 Kings Daughters Medical Center

8 Lake Cumberland Regional

9 Lourdes

10 Medical Center of Bowling Green

11 Owensboro Health Regional

12 St. Joseph

13 Western Baptist

Jewish Hospital, the only designated high-performing hospital in cardiology in Kentucky, is understandably at the top of the list, but in keeping with the evaluation protocol, no discrete numerical ranking is given. This appears consistent with the USNWR’s policy that it provides a numeric ranking only to nationally-ranked hospitals.

What about the others?

Not obvious to the user is the fact that the remaining 12 hospitals are not listed in descending order of their US News Cardiology Score. It appears to me that Jewish, the only high-performing cardiac hospital, was at the top of the list based on its score. The next 6 were sorted by total number of other high-performing specialties, and the last 6 (those without any high-performing specialty at all) in alphabetical order. One hospital had a cardiology score of zero but was not at the bottom of the list! Indeed, that hospital appeared higher in the list than two hospitals whose cardiology scores were better even than those of some hospitals placed further up by virtue of silver badges in other specialties. In my opinion, an individual searching for cardiology expertise in Kentucky could be badly misled.
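The ordering I inferred can be written down as a three-part sort key. This is a minimal Python sketch under that assumption; it is my reading of the list, not a rule published by USNWR. The counts are the in-state high-performing totals given earlier, with zero for the six hospitals that have none. The name `displayed_order` is hypothetical, and no cardiology scores are used because the inferred ordering does not need them.

```python
# (name, high-performing in cardiology?, total high-performing specialties)
# Deliberately entered in scrambled order; the sort does the work.
kentucky_cardiology = [
    ("Western Baptist", False, 0),
    ("Norton Hospital", False, 3),
    ("St. Joseph", False, 0),
    ("Baptist Health Louisville", False, 9),
    ("Jewish Hospital", True, 2),
    ("Owensboro Health Regional", False, 0),
    ("University of Kentucky", False, 7),
    ("Lourdes", False, 0),
    ("St. Elizabeth Edgewood", False, 9),
    ("Kings Daughters Medical Center", False, 1),
    ("Medical Center of Bowling Green", False, 0),
    ("Baptist Health Lexington", False, 10),
    ("Lake Cumberland Regional", False, 0),
]


def displayed_order(rows):
    """Sort by: cardiology badge first, then number of high-performing
    specialties (descending), then name (alphabetical)."""
    return sorted(rows, key=lambda row: (not row[1], -row[2], row[0]))


names = [row[0] for row in displayed_order(kentucky_cardiology)]
for position, name in enumerate(names, start=1):
    print(position, name)
```

If this key reproduces the order shown on the website, as it does for these 13 hospitals, it supports the point that the cardiology score itself plays no part in ordering anything below the first line.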

What went into these scores?

Attached is a table of data extracted from the USNWR website for the 13 hospitals for which Cardiology & Heart Surgery data is presented, in the order in which it is presented to the public. An appendix defining the specific criteria used to calculate the cardiology-specific U.S. News Score is available here.

How did Jewish Hospital earn its score?

Jewish Hospital’s cardiology-specific score appears to be boosted by its very high cardiology patient volume, its better-than-expected survival rate, its number of advanced cardiology technologies, and by its claim to have an in-hospital, state-recognized trauma center of AHA Level I or II. By virtue of having a Kentucky certificate of need for transplantation, Jewish was able to claim 6 advanced cardiology technologies against a maximum of 4 for non-transplanting hospitals.

On the other hand, Jewish Hospital’s cardiology score, on which its high-performing status was based, was diminished by very unfavorable numbers in other categories. Like all but one of the other hospitals on the list, it did not receive a single vote for reputation. Its numbers for patient safety and nursing intensity were frankly among the very lowest on the Kentucky list. Although not included in the aggregate cardiology score, the numbers of surveyed patients who would definitely recommend Jewish Hospital to their friends and family, and who would probably or definitely not recommend it, are respectively the lowest and highest on the list, save for the hospital that got a cardiology score of zero. Surely if Jewish had been able to score higher in these individual measures it could have been a contender for national ranking.

How bad were the others?

It’s not like other hospitals in the state were potted plants in the cardiac hothouse. Norton Hospital had an identical patient volume and scores the same as or better than those of Jewish in all but advanced technologies. Norton’s inability to provide transplantation apparently made the difference. The two Baptist system hospitals and St. Elizabeth Edgewood look pretty good on paper too, with aggregate scores just below that of Jewish despite their inability to offer transplantation.

How would I use these rankings?

Would I go out-of-state to get my appendix taken out? Absolutely not! Furthermore, when and if I get my first heart attack, I would of necessity stay in Louisville and be guided by my primary care doctor; or if I had one, my cardiologist; or even end up wherever a diverted ambulance dropped me off.

Would I personally go to the Cleveland Clinic, Mayo Clinic, or my alma mater New York Presbyterian Hospital to have my aortic valve replaced? If my insurance company permitted, or if I could afford it (assuming I knew how much it would cost), I might well consider making the trip despite the inconvenience to me and my family. I have Medicare and can go anywhere. Those with narrower provider networks may need to select among Kentucky hospitals for care.

What good are lists like these if the providers they rank highly are not financially or physically accessible to people when needed? I am in favor of funneling patients to the best places for them at the right time, as individuals, or by disease. I believe that experience and appropriate use of technology can make a difference in outcomes. I also believe that hyper-competition and overuse of technology are hurting us. It is only in a coordinated and cooperating health system that the potential benefits of ranking systems like the one discussed above can be fully realized. Sadly, we do not have such a system.

My opinions are emboldened by a recent experience before a panel of experts on measuring the quality of medical care. My assertion that our current process “is not yet ready for prime time” was not judged to be far off the mark and was not dismissed. Despite this, major financial decisions are being made today by government and private payers of health services about who gets to do what to whom and for how much. Surely we as both patients and providers deserve to have full confidence that we are doing the right things!

As always, if I have made an error of fact or inappropriate interpretation, let me know.

Peter Hasselbacher, MD

President, KHPI

Emeritus Professor of Medicine, UofL

September 30, 2014
